Your test looks great after three days. Stop or keep going? If you stop too early, the “win” can vanish after launch. If you run too long, you waste time while bigger ideas wait. The right answer comes from one rule:

In ASO A/B tests, runtime depends on the number of visitors per variant, not on the calendar.

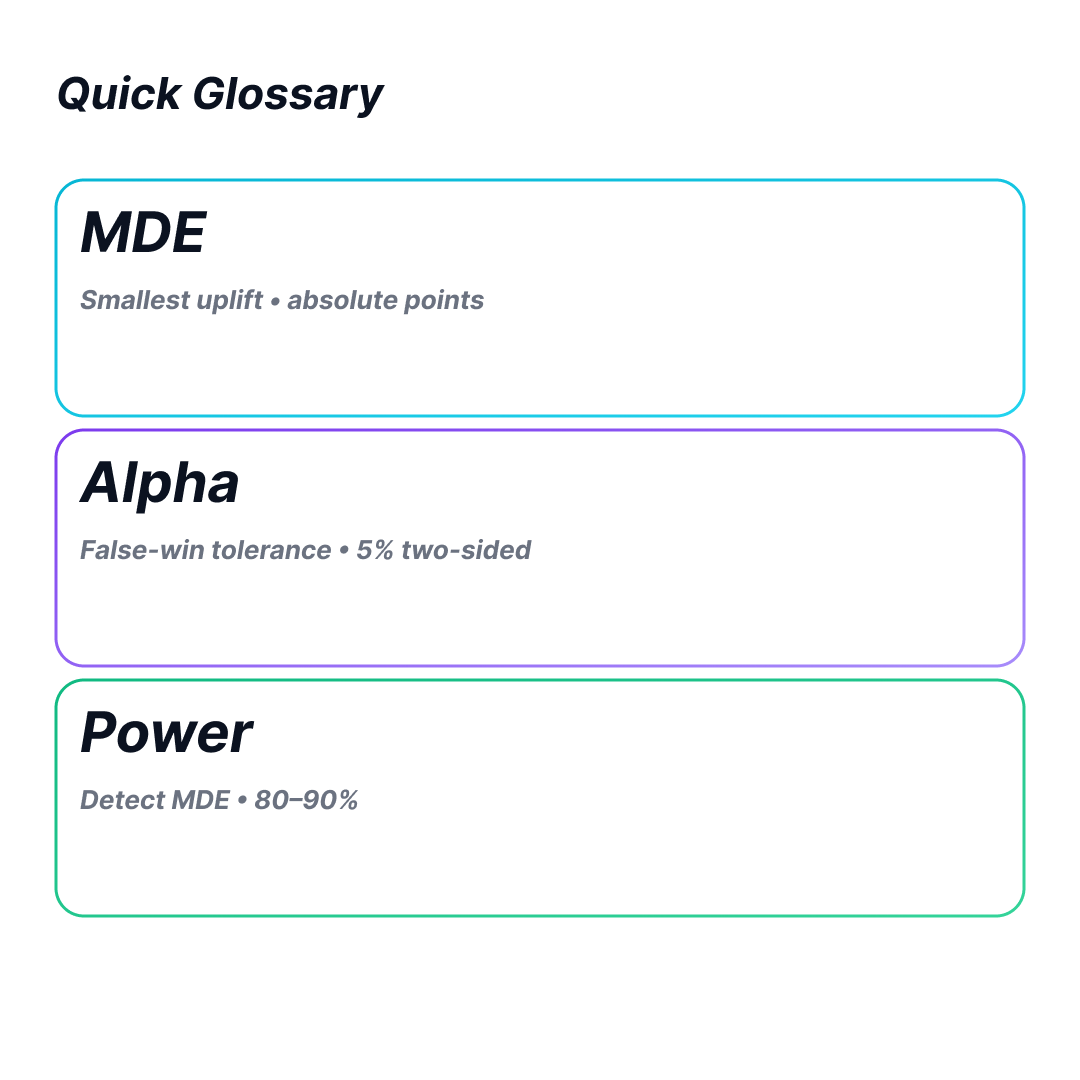

Quick Glossary

- MDE (minimum detectable effect): the smallest uplift that would change a plan or budget, stated in absolute percentage points.

- Alpha: your tolerance for a false win. A common setting is 5%, two-sided (you check for both a lift and a drop).

- Power: the chance your test will detect the MDE if it is real. 80% is common; 90% is stricter.

- Visitors per variant: how many unique visitors each version must see before you can judge it.

What Really Sets Test Length

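Length comes from two things: how many visitors each variant needs and how much traffic each variant gets. The visitor target depends on the effect you care about, your baseline conversion rate, and your alpha and power. Smaller effects need more data. More variants split traffic and slow everything. Fewer variants finish faster and give cleaner reads.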
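To make this concrete, here is one common textbook normal approximation for the visitors each variant needs when comparing two conversion rates. It is a sketch, not necessarily what Apple or Google compute internally. Here p_1 is the baseline conversion rate, p_2 = p_1 + δ where δ is the MDE in absolute terms, α is the two-sided alpha, and 1 − β is the power:

```latex
n \approx \frac{\left(z_{1-\alpha/2} + z_{1-\beta}\right)^{2}\,\left[p_1(1-p_1) + p_2(1-p_2)\right]}{(p_2 - p_1)^{2}}
```

The effect size sits squared in the denominator, so halving the MDE roughly quadruples the visitors per variant, while the baseline enters through the p(1 − p) terms. Traffic does not appear at all; it only decides how many days it takes to reach n.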

Make Results Trustworthy Before You Start

Pick one primary metric. In ASO, conversion to install is the usual choice. Keep the test scope tight: stable markets, stable channels, and a fixed traffic split. Track installs with reliable attribution. Write guardrails into your brief, including which days you will exclude from the analysis: broken tracking days, outages, and heavy promos. Commit to a clear stop rule before launch.
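One way to keep exclusions honest is to list the excluded dates in the brief before launch and apply them mechanically, before anyone sees which variant they would favor. A minimal sketch, assuming hypothetical daily rows with `day`, `variant`, `visitors`, and `installs` fields (not any platform's export format):

```python
from datetime import date

def totals_excluding(rows, excluded_days):
    """Sum visitors and installs per variant, skipping pre-declared excluded days."""
    totals = {}
    for row in rows:
        if row["day"] in excluded_days:
            continue  # outage, broken tracking, or heavy promo listed in the brief
        cell = totals.setdefault(row["variant"], {"visitors": 0, "installs": 0})
        cell["visitors"] += row["visitors"]
        cell["installs"] += row["installs"]
    return totals

# Hypothetical data: the second day was an outage that the brief said to drop.
rows = [
    {"day": date(2024, 3, 2), "variant": "A", "visitors": 5000, "installs": 600},
    {"day": date(2024, 3, 2), "variant": "B", "visitors": 5000, "installs": 640},
    {"day": date(2024, 3, 3), "variant": "A", "visitors": 900, "installs": 20},
    {"day": date(2024, 3, 3), "variant": "B", "visitors": 900, "installs": 90},
]
print(totals_excluding(rows, excluded_days={date(2024, 3, 3)}))
```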

Choose an Effect Size that Matters

MDE is the smallest uplift worth acting on. Big creative changes can justify a larger MDE and shorter runs. Small copy or color tweaks often need a smaller MDE and longer runs. Speak in absolute points for clarity: "12% to 15% is +3 points" (a 25% relative lift). If traffic is thin, focus on bold ideas first.
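The sketch below shows how the MDE drives the visitor target, using the two-proportion normal approximation (an assumption; platforms may use other methods). The 12% baseline comes from the example above, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_variant(baseline, mde_points, alpha=0.05, power=0.80):
    """Approximate visitors each variant needs (two-sided, normal approximation).

    baseline   -- control conversion rate, e.g. 0.12 for 12%
    mde_points -- absolute uplift in percentage points, e.g. 3 for +3 points
    """
    p1 = baseline
    p2 = baseline + mde_points / 100
    z = NormalDist().inv_cdf
    multiplier = (z(1 - alpha / 2) + z(power)) ** 2
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil(multiplier * variance / (p2 - p1) ** 2)

for mde in (3, 1.5, 1):
    relative = mde / 100 / 0.12  # relative lift over the 12% baseline
    print(f"+{mde} points ({relative:.1%} relative): "
          f"{visitors_per_variant(0.12, mde):,} visitors per variant")
```

Halving the MDE from 3 to 1.5 points raises the target from roughly 2,000 to roughly 7,800 visitors per variant, and +1 point needs roughly 17,000. That is why small tweaks need long runs on thin traffic.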

Pick Your Confidence Level (Alpha and Power)

Alpha controls false positives; power controls missed true wins. A practical default is 5% two-sided alpha and 80% power. Tighter alpha or higher power both raise the visitor target and extend the run. Use stricter settings when the stakes are high and the extra time is worth it.
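Under the same normal-approximation assumption, alpha and power enter the visitor target only through one factor: the sum of the z-value for 1 − α/2 and the z-value for power, squared. This sketch compares that factor against the 5%/80% default, so you can see what stricter settings cost before committing to them.

```python
from statistics import NormalDist

def z_factor(alpha, power):
    """(z_{1-alpha/2} + z_{power})^2 for a two-sided test."""
    z = NormalDist().inv_cdf
    return (z(1 - alpha / 2) + z(power)) ** 2

reference = z_factor(0.05, 0.80)  # the practical default
for alpha, power in [(0.05, 0.80), (0.05, 0.90), (0.01, 0.80), (0.01, 0.90)]:
    ratio = z_factor(alpha, power) / reference
    print(f"alpha={alpha:.0%}, power={power:.0%}: {ratio:.2f}x the default visitors")
```

Moving from 80% to 90% power adds roughly a third more visitors, tightening alpha to 1% adds roughly half again, and doing both nearly doubles the run.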

Turn Required Visitors into Calendar Days

Once you know how many visitors each variant needs, convert that into days using your actual daily traffic per variant. Then round up to whole weeks, because weekday and weekend behavior can differ a lot.

Mini-case. Your app gets about 10,000 store page visits per day. You run two variants with a 50/50 split, so each gets ~5,000 visits per day. Your planning says each variant needs ~15,000 visits. The raw minimum is three days. Still, you keep the test for a full week to include a weekend. If you add a third variant, each gets ~3,333 visits per day, so the same target takes about five days instead of three, and any correction for the extra comparison raises the target further. This is why fewer variants finish faster.
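The mini-case arithmetic can be sketched as a small helper. It assumes an even split, that all daily store traffic enters the test, and that the per-variant target stays fixed as variants are added (in practice a multiple-comparison correction would raise it). The function name is hypothetical.

```python
def days_to_target(required_per_variant, daily_visits, variants):
    """Raw days to reach the target with an even split, plus the whole-week plan."""
    # Integer ceiling of required * variants / daily_visits avoids float rounding.
    raw_days = -(-(required_per_variant * variants) // daily_visits)
    planned_days = -(-raw_days // 7) * 7  # round up to whole weeks
    return raw_days, planned_days

for variants in (2, 3, 5):
    raw, planned = days_to_target(15_000, 10_000, variants)
    print(f"{variants} variants: {raw} raw days, plan {planned} days")
# 2 variants: 3 raw days, plan 7 days
# 3 variants: 5 raw days, plan 7 days
# 5 variants: 8 raw days, plan 14 days
```

With five variants the same target no longer fits in one week, so the plan jumps to two.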

Looking Early without Fooling Yourself

Unplanned peeking raises the chance of a false win. If you must look early, schedule interim checks in advance and use a method that controls error across those looks. If your tools do not support sequential testing (monitoring with error control across looks), do not peek. Follow your stop rule.
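The cost of unplanned peeking is easy to simulate. This sketch runs A/A tests (no true difference) checked once a day for two weeks. It assumes equal daily traffic per variant, so the running z-score behaves like a scaled random walk. It counts how often a test ever crosses the usual 5% line, and compares that with a crude fix: splitting alpha evenly across the planned looks (Bonferroni). That fix is conservative; group-sequential boundaries such as Pocock or O'Brien-Fleming, or your platform's built-in sequential method, typically lose less power.

```python
import random
from statistics import NormalDist

def false_win_rates(n_tests=2000, looks=14, alpha=0.05, seed=7):
    """Share of A/A tests declared a 'win' under three reading rules.

    Assumes equal visitors per variant per day, so under no true difference
    the cumulative z-score after k looks is a sum of k independent
    standard-normal increments divided by sqrt(k).
    """
    rng = random.Random(seed)
    z_once = NormalDist().inv_cdf(1 - alpha / 2)             # one look at the end
    z_split = NormalDist().inv_cdf(1 - alpha / (2 * looks))  # alpha split across looks
    final_only = peek_daily = split_alpha = 0
    for _ in range(n_tests):
        running_sum = 0.0
        crossed_once = crossed_split = False
        for k in range(1, looks + 1):
            running_sum += rng.gauss(0.0, 1.0)
            z = running_sum / k ** 0.5
            crossed_once = crossed_once or abs(z) > z_once
            crossed_split = crossed_split or abs(z) > z_split
        final_only += abs(z) > z_once  # read only on the last day
        peek_daily += crossed_once     # stop at the first daily "win"
        split_alpha += crossed_split   # daily looks with split alpha
    return final_only / n_tests, peek_daily / n_tests, split_alpha / n_tests

once, peeking, split = false_win_rates()
print(f"one final look: {once:.1%}, daily peeking: {peeking:.1%}, split alpha: {split:.1%}")
```

Expect the single-look rate near 5%, the daily-peek rate several times higher, and the split-alpha rate below 5%.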

Many Variants, Little Traffic

Every extra variant thins traffic. Two cells are fastest. If you need to explore, use stages: screen several ideas quickly, pick a finalist, then confirm head-to-head. If you judge many variants at once, control for multiple comparisons. Do not crown the first “green” cell without that control.
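Holm's step-down procedure is one standard way to control for multiple comparisons when several treatments are each compared with the control; it always rejects at least as much as plain Bonferroni. A minimal sketch; the p-values in the example are hypothetical.

```python
def holm(p_values, alpha=0.05):
    """Holm step-down: which treatment-vs-control comparisons survive.

    Returns a list of booleans in the same order as p_values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] <= alpha / (m - rank):  # thresholds alpha/m, alpha/(m-1), ...
            reject[i] = True
        else:
            break  # once one fails, all larger p-values fail too
    return reject

# Hypothetical p-values for treatments B, C, D, each against control A.
print(holm([0.01, 0.04, 0.03]))  # [True, False, False]
```

Uncorrected, all three cells would look "green" at 5%; with Holm only the strongest survives.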

Cut Noise to Shorten Runs

Keep traffic splits stable by country and channel. Avoid big promos and store featuring during the run. If your stack supports it, adjust for pre-test levels or traffic-source mix to reduce noise. Treat these tools as accelerators, not as requirements.

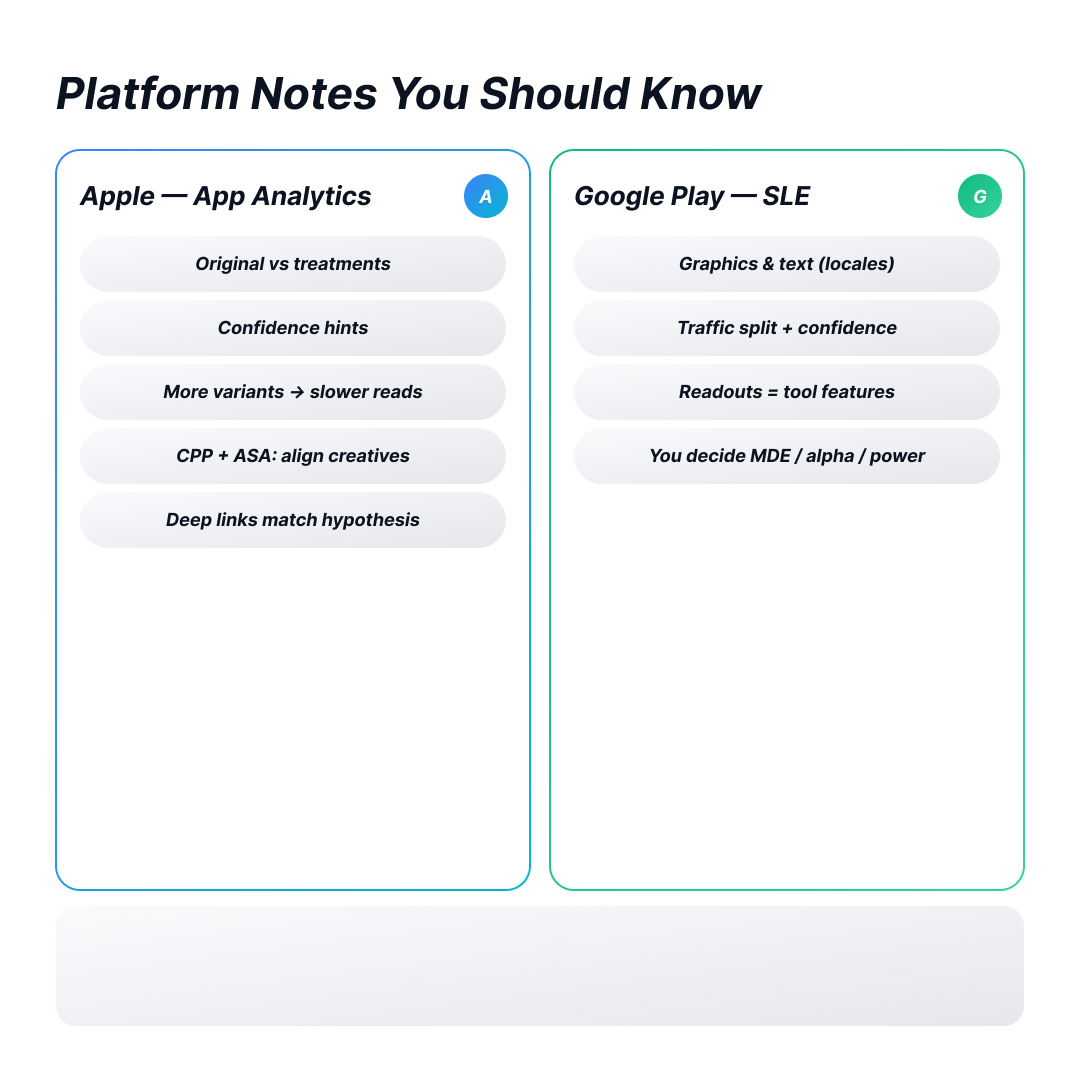

Platform Notes You Should Know

Apple. With product page optimization in App Store Connect, you can test the original page against up to three treatments and read results in App Analytics. Apple shows confidence-based messages to guide you. More treatments split traffic and slow the time to a clear result. If you use Custom Product Pages with Apple Search Ads, align creatives and deep links with the test hypothesis, so paid and organic behavior tell the same story.

Google Play. Store Listing Experiments let you test graphics and text, including localizations. The Console manages traffic splits and shows confidence. Treat those readouts as features of the tool. They do not replace your decisions on MDE, alpha, and power.

The math is platform-agnostic. Operations are not. Always read the fine print on routing, timing, and how results are labeled.

Planning Hints by Asset Type

Icons often produce larger effects and faster calls. Even with a quick read, hold at least a full week to include weekends. Screenshots tend to settle more slowly than icons; lead with a clear value proposition in the first frame. Videos stabilize slowest; the opening seconds matter most.

Watch Outside Forces

Category rank shifts, competitor launches, store featuring, OS updates, and seasonality can move your baseline. Track these during the run. If you cannot avoid a volatile period, record it in the brief and read results with that context in mind.

When to Stop and When to Extend

Stop when each variant has reached its required visitors and daily results look stable across a full week; then read the result at your alpha. Extend only under conditions your brief named before launch: for example, you are close to the target and trends are borderline, weekdays and weekends disagree, or guardrails conflict with the main metric. A flat result still helps: roll back, set a larger MDE for the next try, or refocus on higher-traffic markets.
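The mechanical part of that stop rule can be written down before launch. A minimal sketch, assuming a pooled two-proportion z-test as the significance check (one common choice; your platform may use a different method) and hypothetical function names. Weekly stability and guardrail checks stay human judgments and are not coded here.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(installs_a, visitors_a, installs_b, visitors_b):
    """Two-sided p-value for a difference in conversion (pooled z-test)."""
    rate_a = installs_a / visitors_a
    rate_b = installs_b / visitors_b
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def stop_decision(visitors_per_variant, required, days_run, p_value, alpha=0.05):
    """Apply the pre-registered rule: visitor target and a full week come first."""
    if min(visitors_per_variant) < required or days_run < 7:
        return "keep running"
    if p_value < alpha:
        return "stop: significant"
    return "stop: flat"  # roll back or plan a bolder test

# Hypothetical week: 12% vs 13% conversion on 15,000 visitors each.
p = two_proportion_p_value(1800, 15_000, 1950, 15_000)
print(f"p = {p:.4f}:", stop_decision([15_000, 15_000], 15_000, 7, p))
```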

A Simple Workflow that Scales

Write a one-page brief for every test. Include the hypothesis, asset changes, MDE, alpha, power, required visitors per variant, markets, traffic split, guardrails, and the stop rule. Launch early in the week. Review on a weekly cadence. If you win, roll out and monitor. If you lose, bank the learning and move to a stronger idea. This keeps ASO tests both fast and trustworthy.
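A brief can also live as a small structured record next to the analysis, so nobody edits the plan after launch. A minimal sketch with hypothetical field names mirroring the list above; `frozen=True` makes the record read-only once created, and `problems()` catches a few obvious gaps. It needs Python 3.9+ for the built-in generic annotations.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ExperimentBrief:
    hypothesis: str
    asset_changes: str
    mde_points: float               # absolute percentage points
    alpha: float                    # two-sided
    power: float
    visitors_per_variant: int
    markets: tuple[str, ...]
    traffic_split: tuple[int, ...]  # percent per variant
    guardrails: tuple[str, ...]
    stop_rule: str

    def problems(self) -> list[str]:
        """Return obvious gaps; an empty list means the basics are set."""
        issues = []
        if not self.hypothesis.strip() or not self.stop_rule.strip():
            issues.append("hypothesis and stop rule must be written down")
        if self.mde_points <= 0:
            issues.append("MDE must be positive")
        if not (0 < self.alpha < 1 and 0 < self.power < 1):
            issues.append("alpha and power must be between 0 and 1")
        if len(self.traffic_split) < 2 or sum(self.traffic_split) != 100:
            issues.append("traffic split needs at least two variants summing to 100%")
        if self.visitors_per_variant <= 0:
            issues.append("visitors per variant must be planned before launch")
        if not self.markets:
            issues.append("list the markets in scope")
        return issues

brief = ExperimentBrief(
    hypothesis="A value-first first screenshot lifts install conversion",
    asset_changes="Screenshot 1 only",
    mde_points=1.0, alpha=0.05, power=0.80, visitors_per_variant=15_000,
    markets=("US", "GB"), traffic_split=(50, 50),
    guardrails=("exclude outage days", "no promos during the run"),
    stop_rule="Stop at 15,000 visitors per variant and at least 7 days",
)
print(brief.problems())  # []
```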

FAQ

How long should an ASO test run?

Until each variant reaches the visitor target implied by your MDE, alpha, and power, and you have stability across a full week.

What MDE should we choose first?

Pick the smallest uplift that would change a plan or budget. Smaller MDE means more visitors and longer runs.

Can we stop early when results look great?

Only if you planned interim looks and use a method that controls error across them. If not, you raise the false-positive rate.

How many variants should we run?

Fewer is faster. If you must test many ideas, stage them: screen first, then confirm head-to-head.

Conclusion

The right length for an ASO A/B test is the time it takes to feed each variant the required number of visitors for your chosen MDE, alpha, and power. Then round up to whole weeks so weekday and weekend patterns do not mislead you. Choose an effect size that matters, keep variants few enough that each one gets enough traffic, protect runs from promo noise, and follow the stop rule. That is how you move fast and still trust what you ship.